Getting Started with Python for Data Science: A Beginner’s Guide

Introduction

Data science has grown in importance in recent years. With artificial intelligence now at the centre of technological advancement, data science has become a foundation for new opportunities and career growth with tremendous upside. What is data science, you may ask? It is the discipline of applying statistics to the vast amounts of data we collect in order to extract usable insights from it. It is a way to uncover the secrets of the world around us. It helps us find patterns in how things work, tells us how we do things and what we should improve, and reveals knowledge that was not previously known.

Within the data science ecosystem, Python is one of the most important programming languages, alongside R and a few others. Python is used across many kinds of applications, and data science is among its most common uses. Because of this, there are numerous Python packages suited to data science, and the large, supportive Python community makes it easy for new learners to pick up the language. Let's dive right into your first steps in data science. I will introduce the basics of the Python environment and the essential Python libraries, and then walk through a short project where we can apply them.

Setting up your Python environment

The first step in your data science journey is installing Python, and there are several ways to do it. One is to download it from the Python Software Foundation's official website, python.org, which always offers the latest Python release. Another common option is Anaconda, one of the most widely used open-source Python distributions for data science. Anaconda comes with conda, a package and environment manager that lets you keep multiple isolated Python environments, each like a separate container with its own Python version and packages. For example, you can have one environment set up with data science packages and another for non-data-science work.
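Once Python is installed, whether from python.org or through Anaconda, it is worth confirming that the interpreter runs and that the main data science packages are available. The sketch below prints the Python version and checks whether each package can be found. The helper check_packages is hypothetical, written just for this guide, and relies only on the standard library's importlib.util.find_spec.

```python
import importlib.util
import sys


def check_packages(names):
    """Hypothetical helper: map each package name to True if Python can find it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


print("Python version:", sys.version.split()[0])

# Note: scikit-learn is installed as "scikit-learn" but imported as "sklearn".
status = check_packages(["numpy", "pandas", "matplotlib", "sklearn"])
for name, installed in status.items():
    print(f"{name:<12} {'installed' if installed else 'missing'}")
```

If a package shows as missing, you can install it with pip (for example, pip install pandas) or, inside an Anaconda environment, with conda install pandas.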

Python data types

Before writing any code, it helps to know Python's basic built-in data types:

| Data Type Category | Type | Explanation |
|---|---|---|
| Numeric | `int` | Stores whole numbers (integers) only |
| | `float` | Stores decimal (floating-point) numbers |
| | `complex` | Stores complex numbers, which have an imaginary part (e.g. `2 + 3j`) |
| Text | `str` (string) | Holds text: any sequence of characters |
| Boolean | `bool` | Holds either `True` or `False` |
| Sequence | `list` | An ordered, changeable collection of items |
| | `tuple` | An ordered, immutable collection of items |
| | `range` | An immutable sequence of numbers |
| Set | `set` | An unordered collection of unique items; duplicate values are not kept |
| | `frozenset` | An immutable set |
| Mapping | `dict` (dictionary) | Key-value pairs, written in curly braces `{}` with a colon separating each key from its value |
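To see these types in action, the snippet below creates one example value of each type in the table and prints the type name Python reports for it via type(). The example values themselves are arbitrary.

```python
# One arbitrary example value for each built-in type in the table above.
examples = {
    "int": 42,
    "float": 3.14,
    "complex": 2 + 3j,
    "str": "data science",
    "bool": True,
    "list": [1, 2, 2, 3],
    "tuple": (1, 2, 3),
    "range": range(5),
    "set": {1, 2, 2, 3},  # duplicates are dropped: {1, 2, 3}
    "frozenset": frozenset([1, 2, 2, 3]),
    "dict": {"name": "iris", "rows": 150},
}

# type(value).__name__ gives the name Python uses for the value's type.
for name, value in examples.items():
    print(f"{name:<10} {type(value).__name__:<10} {value!r}")
```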

These data types are the basic building blocks of Python. Beyond data types, Python has its own syntax, or “grammar”, which you need to follow to write working code. Here are some tips on using it.

Indentation

Python uses indentation to group code into blocks, for example the body of a function, a loop, or a condition. Indentation is therefore not optional: inconsistent indentation either raises an IndentationError or changes which statements belong to which block. The PEP 8 Style Guide for Python recommends 4 spaces per indentation level, which keeps blocks visually distinct and easy to tell apart.
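A small illustration of indentation defining blocks: the function body, the if/else branches, and the loop body are each indented by 4 spaces relative to the line that opens them. The function name classify is hypothetical, chosen only for this example.

```python
def classify(number):
    # Everything indented under "def" belongs to the function body.
    if number % 2 == 0:
        # This line runs only when the condition above is True.
        return "even"
    else:
        return "odd"


for n in range(4):
    # The loop body is indented one level under "for".
    print(n, classify(n))
```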

Essential Python libraries for data science

Many Python libraries are available for data science. This guide covers four of the most widely used: NumPy, Pandas, Matplotlib, and Scikit-learn.

Importing a Python library is simple. The keyword “import” loads the library, and adding “as” gives it an alias. An alias reduces the number of characters you need to type, which helps when you refer to the package over and over. For example:

```python
import numpy as np
```
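By convention, NumPy is imported as np and Pandas as pd (Matplotlib's plotting module, matplotlib.pyplot, is usually imported as plt). The sketch below shows that an alias is simply a second, shorter name for the same module, so np.sqrt and numpy.sqrt are the same function.

```python
import numpy
import numpy as np   # the conventional alias for NumPy
import pandas as pd  # the conventional alias for Pandas

# An alias is just a shorter name bound to the same module object.
print(np is numpy)    # True
print(np.sqrt(16.0))  # 4.0

# Less typing every time the library is used: np.array(...) vs numpy.array(...)
values = np.array([1, 2, 3])
```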

NumPy

NumPy is one of the most commonly used libraries in data science. It provides fast multi-dimensional arrays and the mathematical operations that work on them, along with linear algebra routines, Fourier transforms, and matrix operations that are essential for more advanced data manipulation.
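A short sketch of the array operations described above: element-wise arithmetic, a matrix-vector product, and solving a small linear system with np.linalg.solve. The numbers are made up for illustration.

```python
import numpy as np

# A 1-D array: arithmetic applies element-wise, with no explicit loop.
prices = np.array([10.0, 12.5, 9.0, 15.0])
print(prices * 2)     # [20. 25. 18. 30.]
print(prices.mean())  # 11.625

# A 2-D array (a matrix) and a matrix-vector product with the @ operator.
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
x = np.array([1.0, 2.0])
print(A @ x)          # [4. 7.]

# Linear algebra: solve the system A @ w = b for w.
b = np.array([3.0, 5.0])
w = np.linalg.solve(A, b)
print(w)
```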

Pandas

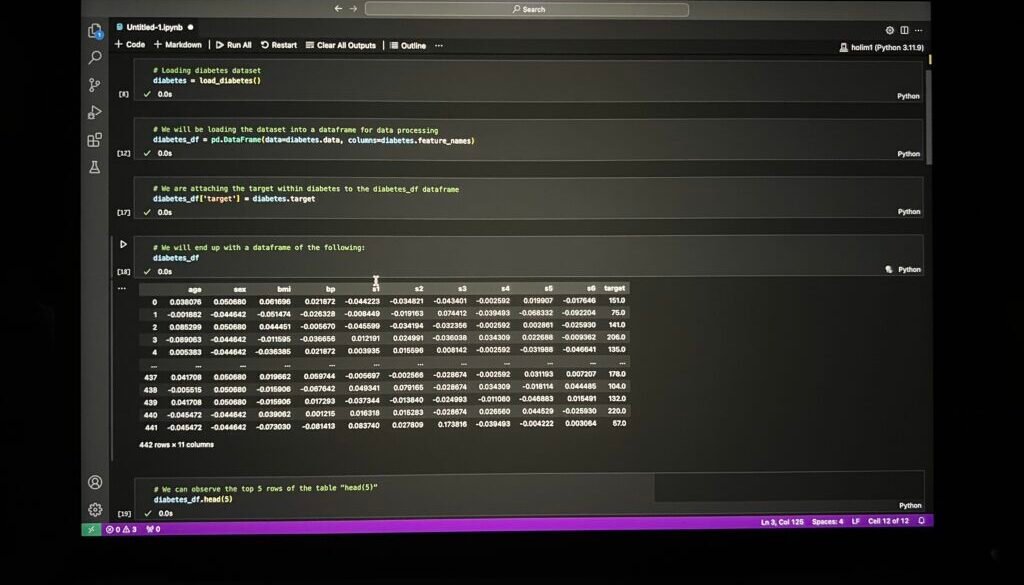

Pandas is a library for manipulating datasets, with functions for data preprocessing, cleaning, and wrangling. Its benefits are hard to overstate. Pandas works with DataFrames, which are simply tables with column headers and a value in every cell. Manipulating DataFrames lets you treat missing data and transform your data. As the saying goes, the quality of your data determines the quality of your model, and Pandas helps you rid your data of errors and noise that would otherwise affect the results of the trained model. Pandas can also import data from many sources, including .csv files, Excel files, tab-separated values, JSON files, SQL databases, and several other formats, so you can bring all of them into the same workflow for analysis.
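A minimal sketch of reading data into a DataFrame and treating missing values. To keep it self-contained, the CSV is held in a string and read through io.StringIO; the file name my_data.csv in the comment, the column names, and the values are all invented for illustration. Filling missing ages with the column mean is only one of several reasonable strategies.

```python
import io

import pandas as pd

# A small CSV held in memory; in practice you would pass a path instead,
# e.g. pd.read_csv("my_data.csv") (a hypothetical file name).
csv_text = """name,age,city
Alice,34,London
Bob,,Paris
Cara,29,
"""
df = pd.read_csv(io.StringIO(csv_text))

# Count the missing values in each column (Bob's age and Cara's city are empty).
print(df.isna().sum())

# Treat missing data: fill the missing age with the column mean
# and the missing city with a placeholder label.
df["age"] = df["age"].fillna(df["age"].mean())
df["city"] = df["city"].fillna("Unknown")

# A simple transformation: add a derived column.
df["age_next_year"] = df["age"] + 1
print(df)
```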

Matplotlib

Matplotlib is a comprehensive package for plotting and data visualization. It lets you produce a wide range of graphs, from pairwise scatter plots to distributions to 3D plots, and to customize their look and feel so they are more appealing to readers. Beyond static, eye-catching plots, it also supports interactive figures that let you explore the data you have plotted. Most importantly, it works well with the other libraries we use constantly in data science, especially NumPy and Pandas, and this compatibility provides a strong foundation for data science work.
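The sketch below draws a distribution (a histogram) and a pairwise scatter plot side by side from synthetic NumPy data. The random data, the output file name example_plots.png, and the styling choices are illustrative assumptions. It selects the non-interactive "Agg" backend so it runs without a display and saves the figure to a file; in a notebook or desktop session you would typically drop that line and call plt.show() instead.

```python
import matplotlib

matplotlib.use("Agg")  # render to a file without needing a display window
import matplotlib.pyplot as plt
import numpy as np

# Synthetic data: y depends linearly on x plus some noise.
rng = np.random.default_rng(seed=0)
x = rng.normal(loc=0.0, scale=1.0, size=500)
y = 2 * x + rng.normal(scale=0.5, size=500)

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(10, 4))

# Left panel: the distribution of x.
ax1.hist(x, bins=30, color="steelblue", edgecolor="white")
ax1.set_title("Distribution of x")
ax1.set_xlabel("x")
ax1.set_ylabel("Count")

# Right panel: the pairwise relationship between x and y.
ax2.scatter(x, y, s=10, alpha=0.6)
ax2.set_title("y versus x")
ax2.set_xlabel("x")
ax2.set_ylabel("y")

fig.tight_layout()
fig.savefig("example_plots.png", dpi=100)
```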

Scikit-learn

Scikit-learn is an open-source package that builds on NumPy and other scientific Python libraries. It provides efficient tools for data preprocessing, a wide range of machine learning algorithms, and model evaluation. It lets you focus on applying data science and machine learning rather than coding the intricate internals of each model yourself. If you want to build machine learning applications, it is a great place to start coding. It covers a wide range of use cases, lets you chain processing steps into a pipeline, and can be extended beyond its built-in functionality.
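To illustrate the three roles mentioned above (preprocessing, a learning algorithm, and evaluation), the sketch below chains feature scaling and a classifier into a single pipeline and scores it with 5-fold cross-validation. The Wine dataset bundled with scikit-learn and the k-nearest neighbours classifier are illustrative choices, not a recommendation for any particular problem.

```python
from sklearn.datasets import load_wine
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Features and labels from a small dataset that ships with scikit-learn.
X, y = load_wine(return_X_y=True)

# Preprocessing (scaling each feature) and the model chained into one estimator.
pipeline = make_pipeline(StandardScaler(), KNeighborsClassifier(n_neighbors=5))

# Model evaluation: accuracy on each of 5 cross-validation folds.
scores = cross_val_score(pipeline, X, y, cv=5)
print(f"Mean accuracy: {scores.mean():.2f}")
```

Because the scaler sits inside the pipeline, it is refitted on the training part of each fold, so information from the held-out fold does not leak into preprocessing.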

Simple Data Science Project

```python
# Let's start with a simple data science project: classifying iris flowers
from sklearn.datasets import load_iris  # the Iris dataset bundled with scikit-learn
from sklearn.linear_model import LogisticRegression  # logistic regression is our model
from sklearn.model_selection import train_test_split  # function to split the dataset
from sklearn.metrics import accuracy_score  # we want to know the accuracy of our model

# Load the Iris dataset
iris = load_iris()  # store the dataset in "iris"
X, y = iris.data, iris.target  # flower measurements go into X, species labels into y

# Split the data into training and testing sets.
# test_size=0.2 keeps 20% of the data for testing, leaving 80% to train on;
# random_state can be any integer and makes the split reproducible.
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Create and train the logistic regression model
# (max_iter is raised from the default of 100 to give the solver room to converge)
model = LogisticRegression(max_iter=1000)
model.fit(X_train, y_train)

# Evaluate the model on the test set
y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.2f}")
```

Next Steps and Resources

I hope you were able to follow along with this article, gained some knowledge of data science, and want to keep learning. For beginners, I recommend starting by getting familiar with Python syntax and with conditions and loops; there are plenty of free resources for this. Here are some of the resources that have helped me along the way in learning data science.

The first resource is W3Schools. It covers many aspects of Python, not only the language fundamentals but also NumPy, Pandas, and other packages that will prove useful in your future work.

The second resource is geeksforgeeks.org, which provides more in-depth data science examples. It shows some best practices and has plenty of examples to guide you along the way.

The third resource is not a website, but I think it can help tremendously if used correctly: large language models such as ChatGPT. These models have been trained on a great deal of code, including Python, so you can ask them to generate exercises for you to practise on and then give them your answers. They can tell you whether your code is a correct solution, and whenever you have questions, you can ask them to explain why a piece of code works or why it fails. This kind of instant feedback is valuable for improving your coding skills quickly.

Above all, the most important thing is enough practice to build muscle memory for Python's syntax and for the structure of a data science workflow.

Conclusion

In this short introduction, we have covered what you need to start your journey in data science. I hope this article has shown you the ropes: installing Python and using some of the most important packages for working with data, though there are many more packages available for data analysis. I have also demonstrated an example that goes from loading data to training and evaluating a prediction model, and shared some resources for learning more. With practice, you will stand on solid ground in data science, keep picking up new techniques, and ultimately make better decisions. Onwards into the fun world of data science!